On 12 September we will be announcing the winners of this year's ALPSP Awards for Innovation in Publishing. In this series of posts, we meet the finalists to learn a little more about each of them.

In this post, we hear from Josh Nicholson, co-founder and CEO of scite.ai.

Tell us a little about your company

The idea behind scite was first discussed nearly five years ago in response to a paper from Amgen reporting that, of 53 major cancer studies the company tried to validate, it could successfully reproduce only 6 (11%). This paper sparked widespread media coverage and concern, and the problem has since come to be known as the “reproducibility crisis.” While this paper received the most attention, perhaps because the numbers are so dire, it was not the first or only paper to reveal the problem. Indeed, Bayer had reported similar findings in other areas of biomedical research, while non-profit reproducibility initiatives revealed the problem in psychology and other fields, suggesting a systemic issue. This is worrisome, to say the least, because scientific research informs nearly every aspect of our lives, from how you raise your children to the drugs being developed for fatal diseases. If most work is not strong enough to be independently reproduced, we are wasting billions of dollars and affecting millions of lives. scite wants to fix this problem by introducing a system that identifies and promotes reliable research.

We do this by ingesting and analyzing millions of scientific articles, extracting the citation context (the text in which each citation appears), and then applying our deep learning models to classify each citation as supporting, contradicting, or simply mentioning the cited work. In short, scite allows anyone to see whether a scientific article has been supported or contradicted.
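To make the end result concrete, here is a minimal sketch of how classified citation statements could be rolled up into per-article tallies of supporting, contradicting, and mentioning citations. It is an illustration under stated assumptions, not scite's implementation: the `Citation` record, its field names, and the sample article IDs are hypothetical, and the labels simply stand in for the output of scite's deep learning classifier, which is not reproduced here.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

# The three citation classes described in the text.
LABELS = ("supporting", "contradicting", "mentioning")


@dataclass(frozen=True)
class Citation:
    """One classified citation statement (hypothetical record layout)."""
    citing_id: str   # article that makes the citation
    cited_id: str    # article being cited
    statement: str   # the sentence containing the citation
    label: str       # one of LABELS, assumed to come from a classifier


def tally(citations):
    """Count supporting/contradicting/mentioning citations per cited article."""
    counts = defaultdict(Counter)
    for c in citations:
        if c.label not in LABELS:
            raise ValueError(f"unknown label: {c.label!r}")
        counts[c.cited_id][c.label] += 1
    # Report every label explicitly, including zero counts.
    return {
        cited: {label: ctr[label] for label in LABELS}
        for cited, ctr in counts.items()
    }


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = [
        Citation("paper-B", "paper-A", "Our results confirm the findings of [1].", "supporting"),
        Citation("paper-C", "paper-A", "Aneuploidy has been studied previously [3].", "mentioning"),
        Citation("paper-D", "paper-A", "In contrast to [2], we observed no increase.", "contradicting"),
        Citation("paper-D", "paper-E", "As described in [4], cells were imaged daily.", "mentioning"),
    ]
    print(tally(sample)["paper-A"])
    # prints {'supporting': 1, 'contradicting': 1, 'mentioning': 1}
```

Keeping the label set fixed and reporting zero counts explicitly means every article gets the same three numbers, which is the shape a badge or tally display would need.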

As a funny aside, my co-founder, Yuri Lazebnik, and I first proposed that someone else, such as Thomson Reuters, Elsevier, or NCBI, should implement the approach now used by scite. After some waiting, we realized that if we wanted it to exist, we would need to build it ourselves, and here we are, five years later, with over 300M classified citations citing over 20M articles!

Tell us a little about how it works and the team behind it

As mentioned, scite is a tool that uses a deep learning model to perform citation analysis at scale, allowing anyone to see whether a scientific paper has been supported or contradicted. To do this, we first need to extract citation statements from full-text scientific articles, which in most cases means extracting them from PDFs. This is very challenging, as there are thousands of citation styles and PDFs vary widely in format and quality. To accomplish it, scite relies on 11 different machine learning models with 20 to 30 features each. We’re fortunate to have Patrice Lopez on the team, who has been developing the tool that does this for over ten years. Once we’ve extracted the citation statements from the articles, we use a deep learning model to classify each citation as supporting, contradicting, or mentioning.
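Extraction is the hard part of the pipeline. As a much-simplified sketch, assuming the PDF has already been converted to plain text and that the article uses bracketed numeric citation markers such as `[3]`, `[2, 5]`, or `[4-6]`, citation statements could be pulled out by splitting the text into sentences and keeping those that contain a marker. The regular expressions, function names, and sample text below are hypothetical; real-world extraction, which at scite involves 11 models, has to cope with thousands of citation styles that a single regex cannot.

```python
import re

# Naive sentence splitter: break after '.', '!' or '?' followed by whitespace.
SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")

# Bracketed numeric markers such as [3], [2, 5], [4-6] or [1, 4-6].
CITATION_MARKER = re.compile(r"\[(\d+(?:-\d+)?(?:,\s*\d+(?:-\d+)?)*)\]")


def expand_marker(body):
    """Turn a marker body like '2, 4-6' into reference numbers [2, 4, 5, 6]."""
    refs = []
    for part in body.split(","):
        part = part.strip()
        if "-" in part:
            start, end = (int(x) for x in part.split("-"))
            refs.extend(range(start, end + 1))
        else:
            refs.append(int(part))
    return refs


def extract_citation_statements(text):
    """Return (sentence, reference numbers) for each sentence that cites something."""
    statements = []
    for sentence in SENTENCE_SPLIT.split(text.strip()):
        refs = []
        for match in CITATION_MARKER.finditer(sentence):
            refs.extend(expand_marker(match.group(1)))
        if refs:
            statements.append((sentence, refs))
    return statements


if __name__ == "__main__":
    # Hypothetical sample text for illustration only.
    sample = (
        "Aneuploidy is common in cancer [1]. We imaged cells for 48 hours. "
        "Extra chromosomes increased mis-segregation, consistent with [2, 4-5]."
    )
    for sentence, refs in extract_citation_statements(sample):
        print(refs, sentence)
    # prints:
    # [1] Aneuploidy is common in cancer [1].
    # [2, 4, 5] Extra chromosomes increased mis-segregation, consistent with [2, 4-5].
```

Each extracted sentence, paired with the references it cites, is the kind of citation statement that would then be handed to a classifier.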

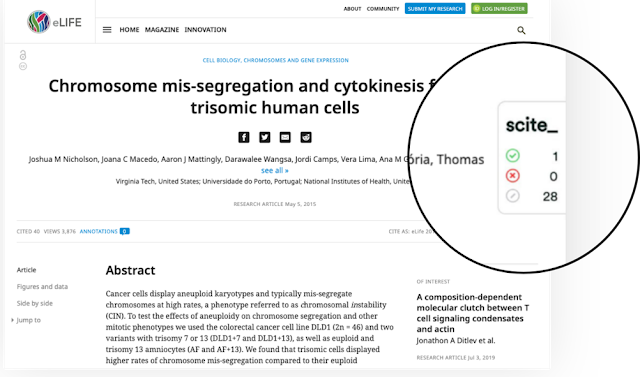

To show the utility of the tool, I like to show my PhD research as it is seen with and without the lens of scite. This study looked at the effects of aneuploidy on chromosome mis-segregation: that is, if you add an extra chromosome to a cell, does the cell make more mistakes during cell division? Our work was published in eLife and was a collaboration between our lab at Virginia Tech and labs in Portugal and at the NIH. It has been cited 40 times to date and viewed roughly 4,000 times. In general, these features are what we as a community look at when assessing a paper: who the authors are, the prestige of the journal it appears in, the authors' affiliations, and metrics such as citation counts and perhaps social media attention (Altmetrics). This information is used to decide whether we want to read or cite a paper, promote its author, join their lab, or give them a grant. These are our proxies of quality. Yet none of them directly measures quality.

With scite, in just a few clicks, you can see that my work has been independently supported by another lab (i.e., it has a supporting citation). To me, this is something like a superpower for researchers, because without scite one would need to read forty papers to find this information, or consult an expert, and even then they might miss it.

Making scite happen requires a special team, and I believe that is what we have built and continue to build at scite. I like to joke that scite is a multinational corporation with offices in Kentucky, Brooklyn, France, Germany, and Connecticut. While true, this is not an entirely accurate representation of the company, just as citation counts are not an entirely accurate representation of a paper.

In fact, scite is a small team of six scientists and developers united not by geography but by a passion to make science more reliable.

In what ways do you think it demonstrates innovation?

The idea behind scite has been discussed since as early as the 1920s, since law already has a similar system, called Shepardizing (lawyers need to make sure they don’t cite overturned cases, as they will quickly lose their argument that way). However, despite such discussions nearly a hundred years ago and multiple attempts to bring something like scite to fruition, even by juggernauts like Elsevier, it did not happen until scite came to life. scite is innovative in that it unlocks a tremendous wealth of information by pushing the latest developments in technology to their limits. With that said, there is so much that we still need to do, and we’re excited about the future and about working with many stakeholders in the community.

What are your plans for the future?

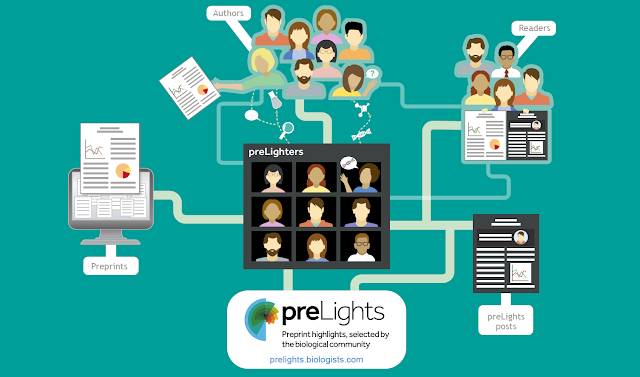

In the near future, we think that anywhere there is scholarly metadata, there is an opportunity for scite to provide value. We are working with publishers to display scite badges, with citation managers to display citation tallies, and with submission systems to implement citation screens, and we are in discussions with various pharmaceutical companies about helping to improve the efficiency of drug development.

Moreover, we will start to expand our citation analytics from articles to people, journals, and institutions.

Longer term, we envision scite as the place where people and machines go to identify reliable research and researchers. We plan to explore micro-publications as a way to offer more rapid feedback into our system, and to invest further in machine learning to see whether we can predict citation patterns as well as promising therapeutics in drug development. There is, I think, much more that we can’t even predict right now. The scientific corpus is arguably the most important corpus in the world. It’s a shame that it is easier to text mine Twitter than cancer research. However, that is also an opportunity, one which we’re seizing now.

Josh Nicholson is co-founder and CEO of scite.ai, a deep learning platform that evaluates the reliability of scientific claims by citation analysis. Previously, he was founder and CEO of The Winnower (acquired 2016) and CEO of Authorea (acquired 2018 by Atypon), two companies aimed at improving how scientists publish and collaborate. He holds a PhD in cell biology from Virginia Tech, where his research focused on the effects of aneuploidy on chromosome segregation in cancer.

Websites

https://scite.ai/

Chrome plugin:

https://chrome.google.com/webstore/detail/scite/homifejhmckachdikhkgomachelakohh

Firefox plugin:

https://addons.mozilla.org/en-US/firefox/addon/scite/

Twitter:

@sciteai

See the lightning sessions by the ALPSP Awards for Innovation in Publishing finalists at the ALPSP Conference on 11-13 September. The winners will be announced at the Dinner on 12 September.

The ALPSP Awards for Innovation in Publishing 2019 are sponsored by MPS Ltd.